I got interested in trying to "paint" with light: using long-exposure photography with a moving light source to create images. The concept is simple. Put a camera on a tripod, turn off all the lights, open the shutter and keep it open, move a light source around in front of the camera, then close the shutter. You'll wind up with a single image showing the light's path as one continuous stream.

If you carefully choreograph the motion, the color changes, and the switching of the lights on and off in software, you can create some interesting "light paintings".

What started me on this idea was some work we do in my Robotics course in Taubman College at the University of Michigan. Here's a post on that:

Robot Motion Analysis Using Light.

Hardware

My plan required a robot to move the lights around and some hardware to control them. I'm using an 8 x 8 array of NeoPixels from Adafruit. This is a really nice unit and only requires 3 pins from the Arduino (power, ground, and a single data pin).
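Programming the panel mostly comes down to addressing each LED by its position along the single data chain. Here is a minimal sketch of that mapping, assuming a hypothetical `pixel_index` helper and an 8 x 8 panel. Whether a particular panel is wired row by row or in a serpentine (zigzag) order is an assumption to check against its documentation.

```python
# Hypothetical helper (not from the original project): map a (row, col)
# position on an 8 x 8 NeoPixel panel to that pixel's index on the data chain.
WIDTH = 8
HEIGHT = 8


def pixel_index(row: int, col: int, serpentine: bool = False) -> int:
    """Return the chain index (0..63) of the LED at (row, col).

    serpentine=False assumes every row runs left to right.
    serpentine=True assumes odd rows run right to left (zigzag wiring).
    """
    if not (0 <= row < HEIGHT and 0 <= col < WIDTH):
        raise ValueError(f"({row}, {col}) is outside the {HEIGHT} x {WIDTH} panel")
    if serpentine and row % 2 == 1:
        # Odd rows are reversed on a zigzag-wired panel.
        return row * WIDTH + (WIDTH - 1 - col)
    return row * WIDTH + col
```

For example, `pixel_index(1, 0)` is 8 on a row-by-row panel but 15 on a serpentine one, which is exactly the kind of mismatch that would scramble a sampled image.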

The lights are really bright (an understatement), and programming them is very easy. The microcontroller that drives the lights is an Arduino Mega (an Uno would be fine as well) with the WiFi Shield. The image below shows the beginning of the prototype. As you can see, the wiring is very simple (one resistor, one capacitor, and the light array):

The robot is a Kuka Agilus KR-6; I used the one in the back of this picture.

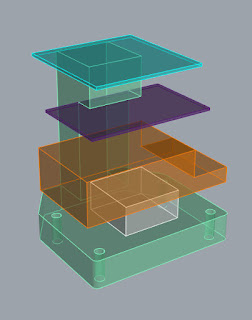

Here is the model of the tool that mounts to the robot (from top to bottom: pixels, prototyping board, and Arduinos):

The finished tool ready to mount:

And mounted to the robot:

Software

This works using Grasshopper and Kuka|prc to control the robot, sample the image, and send the code to the Arduino to drive the pixels.

My first idea was to take any image (a photograph, a picture of a painting, etc.), sample it at points that exactly match the pixel spacing of the LED light panel, then use that sampled data to illuminate the light grid. Those lights form one small part of the overall image. The robot moves the light array and shows new colors at each position.
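The sampling step is easy to sketch in plain Python. This is a minimal sketch under stated assumptions, not the Grasshopper definition itself: the image is already loaded as rows of RGB tuples, sampling is nearest-neighbour, robot +Y points up the image, and `PITCH_MM` is a hypothetical LED spacing. Each move places the panel's lower-left corner on a grid point and shows one 8 x 8 block of samples.

```python
from typing import Iterator, List, Tuple

RGB = Tuple[int, int, int]
Grid = List[List[RGB]]  # grid[row][col]

PANEL = 8        # LEDs per side of the NeoPixel panel
PITCH_MM = 9.0   # hypothetical LED spacing; measure the real panel


def sample_image(image: Grid, moves_x: int, moves_y: int) -> Grid:
    """Nearest-neighbour sample `image` (row 0 = top) onto one point per LED.

    The result has moves_y * PANEL rows and moves_x * PANEL columns, so the
    samples line up with the LED spacing across every robot move. Row 0 of
    the result is the bottom of the picture, assuming robot +Y points up.
    """
    h, w = len(image), len(image[0])
    rows, cols = moves_y * PANEL, moves_x * PANEL
    grid = []
    for r in range(rows):
        y = h - 1 - int((r + 0.5) * h / rows)  # flip: sample row 0 = image bottom
        grid.append([image[y][int((c + 0.5) * w / cols)] for c in range(cols)])
    return grid


def panel_moves(grid: Grid, moves_x: int, moves_y: int) -> Iterator[Tuple[Tuple[float, float], Grid]]:
    """Yield (lower-left corner in mm, 8 x 8 colour block) for each move, row by row."""
    for my in range(moves_y):
        for mx in range(moves_x):
            block = [row[mx * PANEL:(mx + 1) * PANEL]
                     for row in grid[my * PANEL:(my + 1) * PANEL]]
            yield (mx * PANEL * PITCH_MM, my * PANEL * PITCH_MM), block
```

With a 6 x 6 grid of moves this gives 36 positions and 36 × 64 = 2,304 samples. Visiting the moves row by row is the simplest order; a serpentine order would shorten the robot's travel between rows.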

Single LED light panel (yellow) with a 6x6 grid of robot moves (blue):

Sampled points in the image (these are the lower-left corners of the LED light panel).

Robot moves in a 6x6 grid with different colors each time:

All the coordination of motion and data is managed through Grasshopper. Here's the definition; as you can see, it's pretty simple, with not many components. The light blue group is the robot control. The light cyan group generates the Arduino code:

The Grasshopper definition lets you preview what the pixelated image will look like, using baked Rhino geometry. Here are a few examples:

Van Gogh's Starry Night:

A Self-Portrait:

The robot sequence is this:

- Move to a new position.

- Turn on the lights.

- Pause for a bit to expose the image.

- Turn off the lights.

- Repeat until the entire image is covered.
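The sequence above is a simple loop. Here is a minimal sketch, assuming hypothetical `move_to`, `lights_on`, and `lights_off` callbacks standing in for the Kuka|prc and Arduino sides (in the real setup this is coordinated through Grasshopper and the PLC), with the 500 ms exposure mentioned later as the default.

```python
import time
from typing import Any, Callable, Iterable, Tuple

Position = Tuple[float, float]


def run_sequence(
    moves: Iterable[Tuple[Position, Any]],
    move_to: Callable[[Position], None],
    lights_on: Callable[[Any], None],
    lights_off: Callable[[], None],
    exposure_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Move, light, expose, and darken for every (position, colours) pair.

    move_to, lights_on and lights_off are hypothetical stand-ins for the
    robot and Arduino interfaces. Returns the number of exposures made.
    """
    count = 0
    for position, colours in moves:
        move_to(position)      # move to a new position
        lights_on(colours)     # turn on the lights with this move's colours
        sleep(exposure_s)      # pause to expose the image
        lights_off()           # turn off the lights before the next move
        count += 1             # repeat until the whole image is covered
    return count
```

Turning the lights off before each move is what keeps the robot's travel between positions from showing up as streaks in the photograph.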

My first pass at this loaded all the color data, for every move, into the Arduino's memory at the start. The very small memory of an Arduino, even a Mega, made only 30 or so moves possible. I wanted more than that, so I switched to using an Arduino WiFi Shield to transmit the color data to the tool at each stop point. This removes the memory limit on the size of the area that's covered.
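Some rough arithmetic shows why the limit is so low. Each move needs 64 pixels × 3 bytes = 192 bytes of color data, and the Mega has 8 KB (8,192 bytes) of SRAM, so even with nothing else in memory only 42 moves would fit. Reserving roughly 2 KB for the program's other needs, a guess rather than a measurement, leaves room for 32, in line with the 30 or so observed. The sketch below also shows one way a single move could be packed for sending over WiFi; the message layout (a 2-byte move index followed by 192 RGB bytes) is hypothetical, not the format the Grasshopper definition actually uses.

```python
import struct
from typing import List, Tuple

MEGA_SRAM_BYTES = 8 * 1024   # SRAM on the Arduino Mega's ATmega2560
BYTES_PER_MOVE = 8 * 8 * 3   # 64 pixels x (R, G, B), one byte per channel


def moves_that_fit(reserved_bytes: int) -> int:
    """Moves whose colour data fit in SRAM after reserving memory for
    everything else (stack, library buffers, ...). reserved_bytes is an estimate."""
    return max(0, (MEGA_SRAM_BYTES - reserved_bytes) // BYTES_PER_MOVE)


def pack_move(index: int, pixels: List[Tuple[int, int, int]]) -> bytes:
    """Hypothetical wire format: big-endian 2-byte move index, then 64 RGB triples."""
    if len(pixels) != 64:
        raise ValueError("expected 64 pixels")
    # bytes() raises ValueError if any channel is outside 0..255.
    payload = bytes(channel for rgb in pixels for channel in rgb)
    return struct.pack(">H", index) + payload
```

Sending one 194-byte message per stop means the Arduino only ever holds the current move's colors, which is why the total number of moves is no longer bounded by SRAM.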

Interfacing with the PLC

A robot has something called a Programmable Logic Controller (PLC). This is what lets the robot interface with external inputs and outputs. Example inputs are limit switches and cameras; example outputs are servo motors and warning lights.

A program can also use the PLC to find out about the state of the robot: the current position and orientation of the tool, the joint angles, and so on.

For this project the PLC is used to track when the robot is moving. The robot program sets a memory bit in the PLC to indicate that the robot has arrived at a new position, waits for a short time while the lights are on, and then turns that bit off.

The Grasshopper definition monitors the PLC and tells the Arduino when to turn the lights on and off. It also sends the new color data over WiFi.
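The monitoring side amounts to edge detection on a polled bit. Here is a minimal sketch, assuming the PLC bit has already been read into a sequence of booleans (how Grasshopper actually reads the PLC is not shown). The function name `light_commands` is hypothetical: a rising edge means the robot has arrived, so the colors are sent and the lights turn on; a falling edge means the dwell is over, so the lights turn off.

```python
from typing import Iterable, List


def light_commands(samples: Iterable[bool]) -> List[str]:
    """Turn successive polls of the 'robot has arrived' bit into commands."""
    commands: List[str] = []
    previous = False  # assume the bit, and the lights, start off
    for bit in samples:
        if bit and not previous:
            commands.append("on")   # rising edge: send colours, lights on
        elif previous and not bit:
            commands.append("off")  # falling edge: dwell over, lights off
        previous = bit
    return commands
```

Acting on edges rather than on the bit's level means each stop triggers exactly one color update, no matter how many times the bit is polled while it stays set.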

First Test Images

The first attempts were interesting. They prove the concept works, which was very exciting to see for the first time, but they also clearly show there is room for improvement!

Here's the gradient example. I cropped this image wide so you can see the context: the robot workcell, the robot in the background, the window behind, and so on. The robot moved the light array 36 times to produce this image, so that's 36 moves times 64 pixels per move, or 2,304 pixels in total. The tool was slightly rotated, which makes the grid vary a bit. That's an easy fix, but it's interesting in the image below because it makes it very clear where each move was.

Here's a self-portrait. You can see variation in color intensity from one robot move to the next. I realized this is because the robot doesn't stay at each location for the same amount of time: it checks whether it should move only every 200 ms, while the exposure time is only 500 ms. So when the robot is triggered to move early, the exposure can differ by up to one 200 ms polling interval, nearly 40% of the 500 ms exposure. This is also an easy fix: I'll just sample every 10 ms. The PLC also supports interrupt-driven notifications, which would be even better.
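A simplified model makes the 40% figure concrete. Assume, hypothetically, that polls happen on a fixed clock and the dwell can only end on a tick at or after the nominal 500 ms. The exposure then depends on where the lights came on relative to that clock, and the spread between the shortest and longest exposure approaches one full polling interval. In the real controller the error may fall early rather than late; what the model captures is the size of the spread, roughly poll interval divided by exposure time.

```python
import math


def exposure_ms(on_ms: float, nominal_ms: float, poll_ms: float) -> float:
    """Exposure when the end of the dwell is only noticed on a poll tick.

    Simplified model: polls happen at 0, poll_ms, 2 * poll_ms, ...; the lights
    came on at on_ms and go off at the first poll at or after on_ms + nominal_ms.
    """
    end = math.ceil((on_ms + nominal_ms) / poll_ms) * poll_ms
    return end - on_ms


def worst_case_spread(nominal_ms: float, poll_ms: float, steps: int = 1000) -> float:
    """Longest minus shortest exposure over start times spread across one poll period."""
    times = [exposure_ms(poll_ms * k / steps, nominal_ms, poll_ms) for k in range(steps)]
    return max(times) - min(times)
```

Under this model, a 200 ms poll gives a spread just under 200 ms (about 40% of 500 ms), while a 10 ms poll brings it down to about 2%.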

Here's a portion of Vermeer's Girl with a Pearl Earring. This image again shows the variation in intensity. It also shows that where colors get very dark, the variation in RGB intensity of the pixels can become an issue. Amusingly, she has red-eye! Obviously, all the images need more pixels to look good. That's accomplished with more robot moves, but also by moving between the pixels. It's possible to quadruple the resolution of each of these images simply by moving into the space between the pixels and updating the color values.
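Moving into the gaps works by offsetting the panel by half the LED pitch. Here is a minimal sketch, with hypothetical `PANEL` and `PITCH_MM` values and helper names: for each base position, expose at offsets of (0, 0), (half, 0), (0, half), and (half, half) a pitch, and sample the image on a grid twice as dense in each direction. With the 6 x 6 grid of moves that means 144 exposures and 9,216 pixels instead of 36 and 2,304.

```python
from typing import List, Tuple

PANEL = 8        # LEDs per side of the panel
PITCH_MM = 9.0   # hypothetical LED spacing


def interleaved_positions(moves_x: int, moves_y: int, factor: int = 2) -> List[Tuple[float, float]]:
    """Panel lower-left-corner positions for a factor x factor sub-pixel interleave.

    factor=2 adds half-pitch offsets in X, in Y, and in both, giving four
    exposures per base position and four times as many image pixels.
    """
    step = PITCH_MM / factor
    positions = []
    for my in range(moves_y):
        for mx in range(moves_x):
            base_x, base_y = mx * PANEL * PITCH_MM, my * PANEL * PITCH_MM
            for dy in range(factor):
                for dx in range(factor):
                    positions.append((base_x + dx * step, base_y + dy * step))
    return positions


def sample_index(move_x: int, move_y: int, dx: int, dy: int,
                 led_col: int, led_row: int, factor: int = 2) -> Tuple[int, int]:
    """(col, row) in a sample grid `factor` times denser than the LED grid."""
    col = (move_x * PANEL + led_col) * factor + dx
    row = (move_y * PANEL + led_row) * factor + dy
    return col, row
```

Each LED at sub-offset (dx, dy) lands exactly on its own cell of the denser sample grid, so the four exposures interleave without overlapping.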

Van Gogh's Starry Night. With many more pixels this could be a nice image. It would also be interesting to experiment with moving the robot back a foot or so, reducing the intensity, and sweeping the robot with the lights on while the colors change, so you'd have an overlay of the two. You could get a layered look, a sense of depth, and a sense of motion.

Next Steps

I'll be doing more with this in the future. One goal is to see if I can make Chuck Close-style images. Here's an example of one of his self-portraits:

His work is much, much more interesting (more examples are available here). Each cell in the image is multicolored. Also, the rectangular array of cells is rotated 45 degrees rather than aligned vertically and horizontally. His cells are also not all circular; some are elliptical or triangular. My plan is to put the correct color in the center of each cell, then generate a new color and move it in a circle around the center point. His cells have 3 or 4 colors each, and he also crosses the cell boundaries as he sees fit. That I cannot do. But it'll be interesting to see how far I can get.

It's also possible to leave the LEDs on while the robot moves, or to move the robot through space so as to generate 2D images of painting in 3D. I'll be experimenting with many techniques (software changes) and will have another updated post in the future.

Other Methods

See this post for some other experiments I've done:

Robotic Painting with a Line of Lights.